<LARS>: Light Augmented Reality System

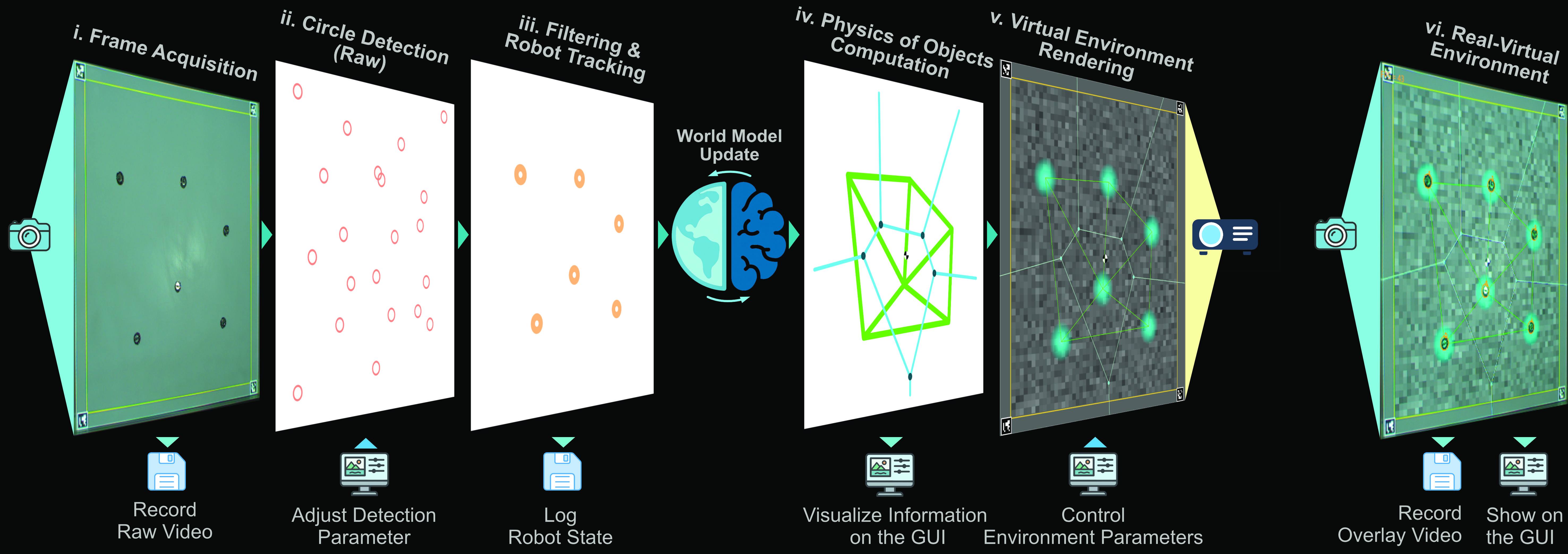

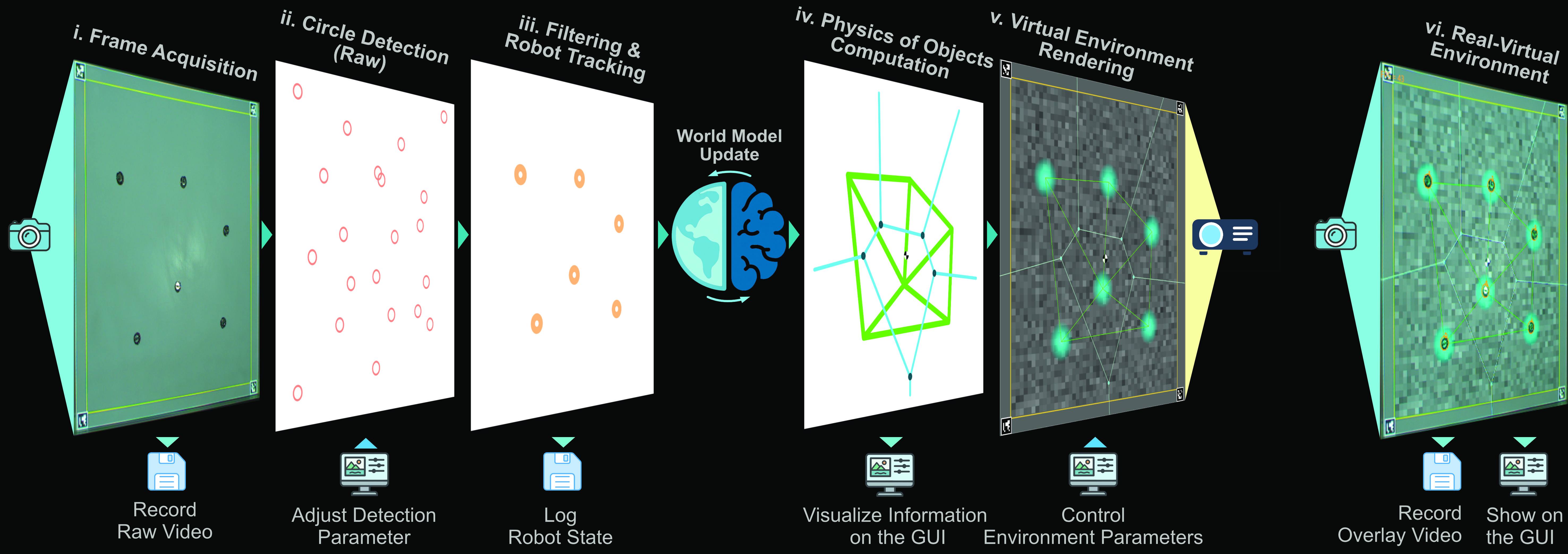

Overview

LARS (Light Augmented Reality System) is a standalone framework for interaction between physical collectives, such as robot swarms, and virtual environments. It is an end-to-end pipeline that integrates high-speed detection, real-time tracking, and dynamic projection in a single architecture. The system follows a Model-View-Controller (MVC) pattern and is designed to let researchers avoid complex hardware setups and move straight to experimentation. At its core is a marker-free tracking engine.

Functional Summary

LARS is a cross-platform, open-source framework that uses Extended Reality (XR) to merge physical and virtual worlds. It projects dynamic visual objects (gradients, trails, and fields) directly into the environment to enable stigmergy, that is, indirect communication between robots through the environment.

Closed-Loop Interaction: Robots Reacting to Virtual Pheromones

Marker-Free Tracking

Based on the ARK algorithm, LARS provides robust, real-time tracking for 100+ robots without tags or hardware modifications.
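To give a feel for what tracking without tags involves, the sketch below keeps robot identities stable across frames by matching each known track to its nearest new detection. It is not the ARK algorithm, whose details are not described here. The function `associate`, its parameters, and the `max_dist` threshold are hypothetical names chosen for this illustration.

```python
import math


def associate(tracks, detections, max_dist=20.0):
    """Greedy nearest-neighbour matching (illustrative only, not ARK).

    tracks:     dict of track id -> (x, y) from the previous frame
    detections: list of (x, y) centroids found in the current frame
    Returns a dict of track id -> (x, y); tracks with no detection
    within max_dist keep their previous position.
    """
    # Every (distance, track id, detection index) pair, closest first.
    pairs = sorted(
        (math.dist(pos, det), tid, j)
        for tid, pos in tracks.items()
        for j, det in enumerate(detections)
    )
    updated = dict(tracks)
    used_tracks, used_dets = set(), set()
    for dist, tid, j in pairs:
        if dist > max_dist:
            break  # all remaining pairs are even further apart
        if tid in used_tracks or j in used_dets:
            continue  # each track and each detection is used at most once
        updated[tid] = detections[j]
        used_tracks.add(tid)
        used_dets.add(j)
    return updated


previous = {0: (10.0, 10.0), 1: (50.0, 50.0)}
current = [(52.0, 49.0), (11.0, 12.0)]  # detections arrive unordered
print(associate(previous, current))
# {0: (11.0, 12.0), 1: (52.0, 49.0)}
```

Greedy matching is the simplest option and can swap identities when robots pass close to each other; a real tracker would need something more careful in dense swarms.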

Virtual Stigmergy

Turns “invisible” collective dynamics into tangible experiences by projecting virtual pheromones that robots can sense and modify.
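One way to picture a virtual pheromone layer is as a grid of intensities that robots write to and read from. The sketch below is a minimal illustration under that assumption, not the LARS implementation: the `PheromoneField` class, its method names, and the evaporation rule are all hypothetical.

```python
class PheromoneField:
    """Grid of virtual pheromone intensities (hypothetical, not LARS API)."""

    def __init__(self, width, height, evaporation=0.1):
        self.width = width
        self.height = height
        self.evaporation = evaporation  # assumed: fraction lost per step
        self.grid = [[0.0] * width for _ in range(height)]

    def deposit(self, x, y, amount=1.0):
        """A robot at cell (x, y) modifies the field by adding pheromone."""
        self.grid[y][x] += amount

    def sense(self, x, y):
        """A robot at cell (x, y) reads the local intensity."""
        return self.grid[y][x]

    def step(self):
        """Evaporate: every cell decays by the same fraction."""
        keep = 1.0 - self.evaporation
        for row in self.grid:
            for i in range(len(row)):
                row[i] *= keep


field = PheromoneField(width=4, height=3, evaporation=0.5)
field.deposit(1, 2, amount=2.0)  # a robot leaves a mark
field.step()                     # one update of the virtual environment
print(field.sense(1, 2))         # 1.0
```

In a projected setup, the grid would be rendered onto the arena each frame, so the same state that robots sense is also what observers see.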

Key Capability

Enables closed-loop interaction: physical robots react to projected virtual objects, and virtual agents react to physical obstacles, which helps bridge the reality gap.

Why LARS?

Reproducibility

Standardizes experimental setups by replacing physical arenas with projected, controllable virtual environments.

Education

Makes abstract collective behaviors observable and interactive for students and public engagement.

Flexibility

Supports diverse platforms (Kilobots, Thymio, e-puck) with no hardware modifications required.

Example Scenarios

Collective Decision-Making

Visually tracking 100+ robots as they reach consensus in a noisy environment. LARS projects options and visualizes the group’s “choice” in real time.

Interactive Swarm Demos

Allowing visitors to “steer” a swarm using hand gestures or projected cues, demonstrating complex systems concepts like self-organization and emergence.

MVC Architecture

LARS follows a classic Model-View-Controller pattern to ensure modularity and real-time performance.
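As a rough illustration of how the three MVC roles could map onto a tracking-and-projection loop, consider the sketch below. The class names (`ArenaModel`, `ProjectionView`, `ExperimentController`) and the draw-command format are assumptions made for this example and are not taken from the LARS codebase.

```python
class ArenaModel:
    """Model: holds the world state (robot positions and virtual objects)."""

    def __init__(self):
        self.robots = {}           # robot id -> (x, y)
        self.virtual_objects = []  # e.g. points of a projected trail


class ProjectionView:
    """View: turns model state into draw commands for the projector."""

    def render(self, model):
        frame = [("robot", rid, pos) for rid, pos in sorted(model.robots.items())]
        frame += [("trail", None, pos) for pos in model.virtual_objects]
        return frame


class ExperimentController:
    """Controller: feeds tracker output into the model, then asks the view to draw."""

    def __init__(self, model, view):
        self.model = model
        self.view = view

    def on_tracking_update(self, detections):
        for rid, pos in detections.items():
            self.model.robots[rid] = pos
            self.model.virtual_objects.append(pos)  # leave a trail point
        return self.view.render(self.model)


controller = ExperimentController(ArenaModel(), ProjectionView())
print(controller.on_tracking_update({0: (1.0, 2.0)}))
# [('robot', 0, (1.0, 2.0)), ('trail', None, (1.0, 2.0))]
```

Keeping rendering out of the model means the same state could drive a projector, an on-screen preview, or a log file without touching the tracking code.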

Technical Stack & Skills